This post first appeared on Linux.com

By Amar Kapadia

In this article series, we have been discussing the Understanding OPNFV book. Previously, we covered chapters 1-5, which introduce network functions virtualization (NFV), the role of OPNFV in network transformation, and how OPNFV integrates and enhances upstream projects. We continue the series with chapters 6 and 7, which provide in-depth insight into the OPNFV DevOps toolchain, hardware labs, continuous integration (CI) pipeline, and deployment tools (installers).

As mentioned previously, OPNFV integrates a number of upstream projects along with code contributions from the OPNFV community. To integrate and test these projects and contributions in an automated manner, the OPNFV project uses a variety of DevOps tools, hardware labs and a sophisticated CI pipeline. In fact, there is no better way for a telecom operator to absorb the principles of DevOps than by joining OPNFV.

Chapter 6 of the book starts by discussing the software and cloud-based tools that OPNFV uses for DevOps:

- Collaboration: JIRA/Confluence

- Source code management and code review: Git, Gerrit, and GitHub

- CI/software automation: Jenkins

- Artifact repository: Google Cloud Storage and Docker Hub

Here is an excerpt from the book discussing Gerrit:

Code Reviews – Gerrit

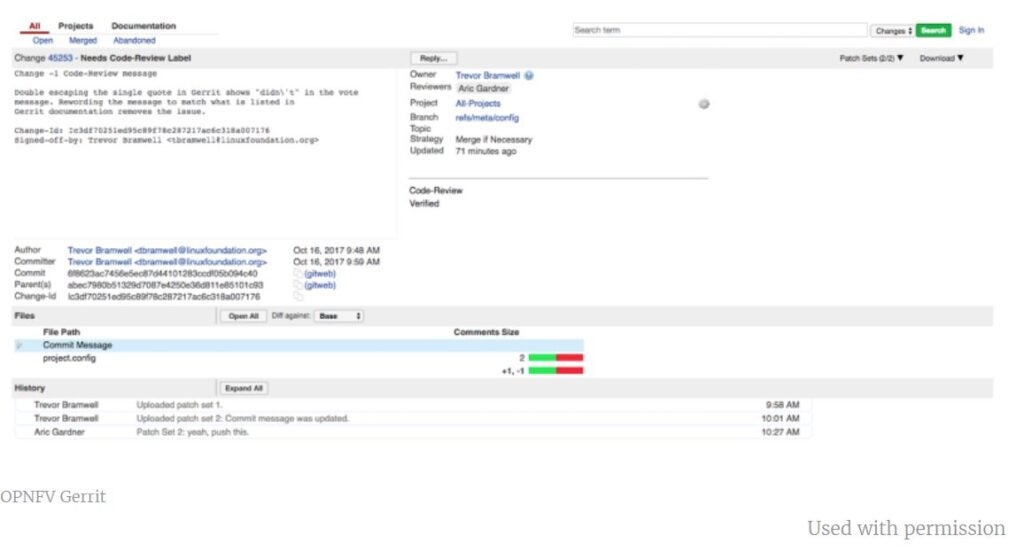

Committing to master requires an approval process, and this process is managed through a tool called Gerrit. Gerrit is an open source web-based code review tool developed by Google. All changes pushed by contributors using a `git push` or `git review` command are reviewed in Gerrit by a set of reviewers, who view and inspect the patch. Reviewers also get to see the results of a continuous integration (CI) build and automated verify test run. Reviewers provide scores of +2, +1, -1 or -2. A +2 is a definite accept, while a -2 is a definite reject. A +1 or -1 may result in the change being accepted, rejected or sent back for changes.

OPNFV Gerrit

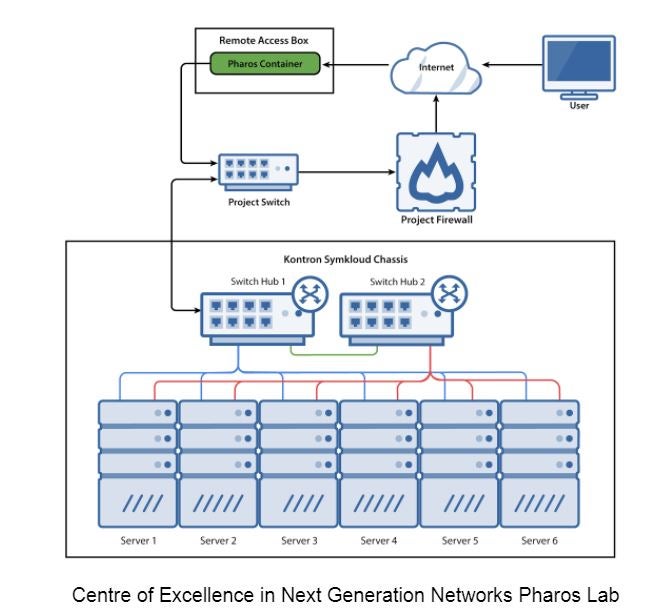
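The voting rule in the excerpt can be made concrete with a short Python sketch. It assumes Gerrit's default Code-Review behaviour, in which a change needs at least one +2 and no -2 before it can be submitted. Individual projects can configure other rules (and CI results normally arrive as a separate Verified label), so treat this as an illustration rather than OPNFV's exact Gerrit configuration. The function name `code_review_submittable` is hypothetical.

```python
from typing import Iterable

VALID_VOTES = {-2, -1, 0, 1, 2}


def code_review_submittable(votes: Iterable[int]) -> bool:
    """Return True if a set of Code-Review votes allows submission.

    Assumption: mirrors Gerrit's default Code-Review rule, i.e. at least
    one +2 and no -2. Votes of +1/-1 are advisory and never decide alone.
    """
    votes = list(votes)
    for vote in votes:
        if vote not in VALID_VOTES:
            raise ValueError(f"invalid Code-Review vote: {vote}")
    has_approval = 2 in votes  # a definite accept from a reviewer
    has_veto = -2 in votes     # any definite reject blocks the change
    return has_approval and not has_veto


print(code_review_submittable([1, 1]))   # False: no +2 yet
print(code_review_submittable([2, 1]))   # True
print(code_review_submittable([2, -2]))  # False: the -2 blocks it
```

A veto-style rule like this means a single -2 outweighs any number of positive votes, which matches the excerpt's description of -2 as a definite reject.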

The chapter then describes the hardware labs used for automated integration and testing jobs. OPNFV has defined a standardized set of hardware, called a Pharos lab, consisting of 6 nodes and associated switches, on which the CI pipeline automatically deploys OPNFV software. The Pharos lab concept has been very successful, with 16 labs distributed around the world working seamlessly together.

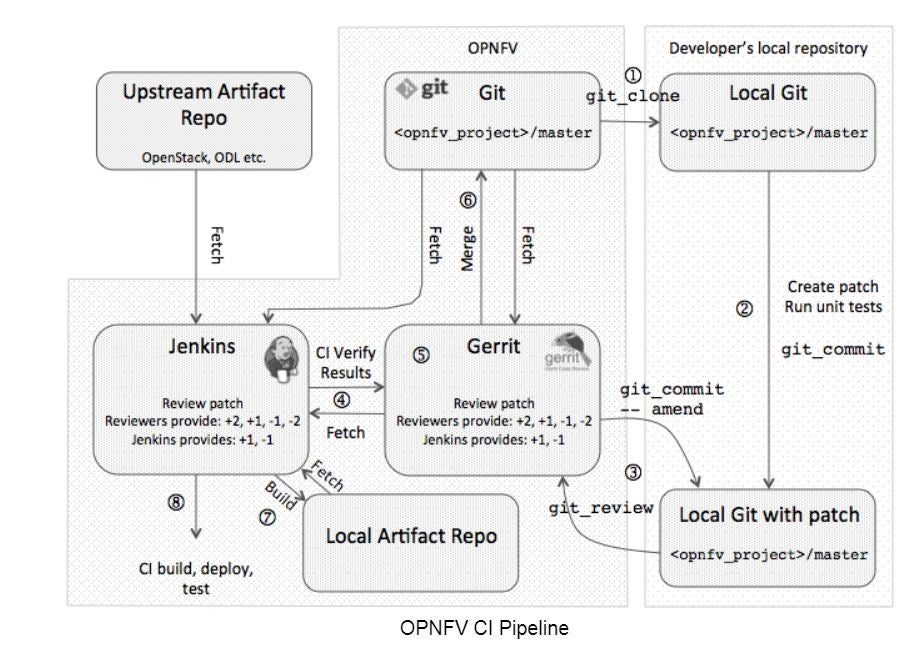

Chapter 6 continues by describing the CI pipeline in detail: changes in upstream projects or community code contributions trigger integration jobs, while scheduled intervals (such as daily or weekly) trigger testing jobs. The CI pipeline diagram from the book is shown below:

Chapter 7 starts by exploring the concept of OPNFV scenarios. Since OPNFV offers multiple choices for each software layer, numerous permutations are possible. In addition to the different upstream projects described in the previous blog post, OPNFV also allows for diversity in installers. The list of scenarios represents a subset of all possible permutations; effectively, each scenario is a tested reference architecture. Examples of scenarios are:

- OpenStack + OpenDaylight (ODL) + L3 FD.io + High Availability (HA) using the Apex installer, or

- OpenStack + OpenContrail + HA using the JOID installer

The OPNFV Danube release had 55 scenarios. However, if we ignore non-HA scenarios and the specific installer used, we are down to 21 distinct usable scenarios.
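The reduction from 55 scenarios to 21 is essentially a filter-and-deduplicate step. The Python sketch below assumes scenario names follow OPNFV's `os-<controller>-<feature>-<mode>` pattern, where the last field is `ha` or `noha`. The deployment list is a small hypothetical sample, not the actual Danube list, so its counts do not reproduce the book's numbers; `distinct_ha_scenarios` is likewise a hypothetical helper.

```python
# Hypothetical sample of (installer, scenario) pairs, for illustration only.
# Names follow the os-<controller>-<feature>-<mode> pattern; mode is "ha" or "noha".
DEPLOYMENTS = [
    ("apex", "os-odl_l3-fdio-ha"),
    ("apex", "os-odl_l3-fdio-noha"),
    ("apex", "os-nosdn-nofeature-ha"),
    ("compass", "os-nosdn-nofeature-ha"),
    ("fuel", "os-nosdn-nofeature-ha"),
    ("fuel", "os-nosdn-nofeature-noha"),
    ("joid", "os-odl_l2-nofeature-ha"),
]


def distinct_ha_scenarios(deployments):
    """Keep HA scenarios only, ignore the installer, and deduplicate by name."""
    names = {
        scenario
        for _installer, scenario in deployments
        if scenario.split("-")[-1] == "ha"  # last field is the HA mode
    }
    return sorted(names)


distinct = distinct_ha_scenarios(DEPLOYMENTS)
print(f"{len(DEPLOYMENTS)} installer/scenario combinations -> {len(distinct)} distinct HA scenarios")
# prints "7 installer/scenario combinations -> 3 distinct HA scenarios"
for name in distinct:
    print(" ", name)
```

Deduplicating on the scenario name alone is what makes the installer irrelevant: `os-nosdn-nofeature-ha` deployed by three different installers counts only once.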

The chapter continues with an overview of the four major installers used in the Danube release (Apex, Compass, Fuel and JOID) and ends with a discussion of additional deployment-related projects such as Daisy (a new installer), IPv6, Parser, ARMBand (to run OPNFV on ARM) and FastDataStacks (FD.io with OPNFV).

Want to learn more? You can check out the previous blog post that discussed the broader NFV transformation complexities and how OPNFV solves an important piece of the puzzle, download the Understanding OPNFV ebook in PDF (in English or Chinese), or order a printed version on Amazon.